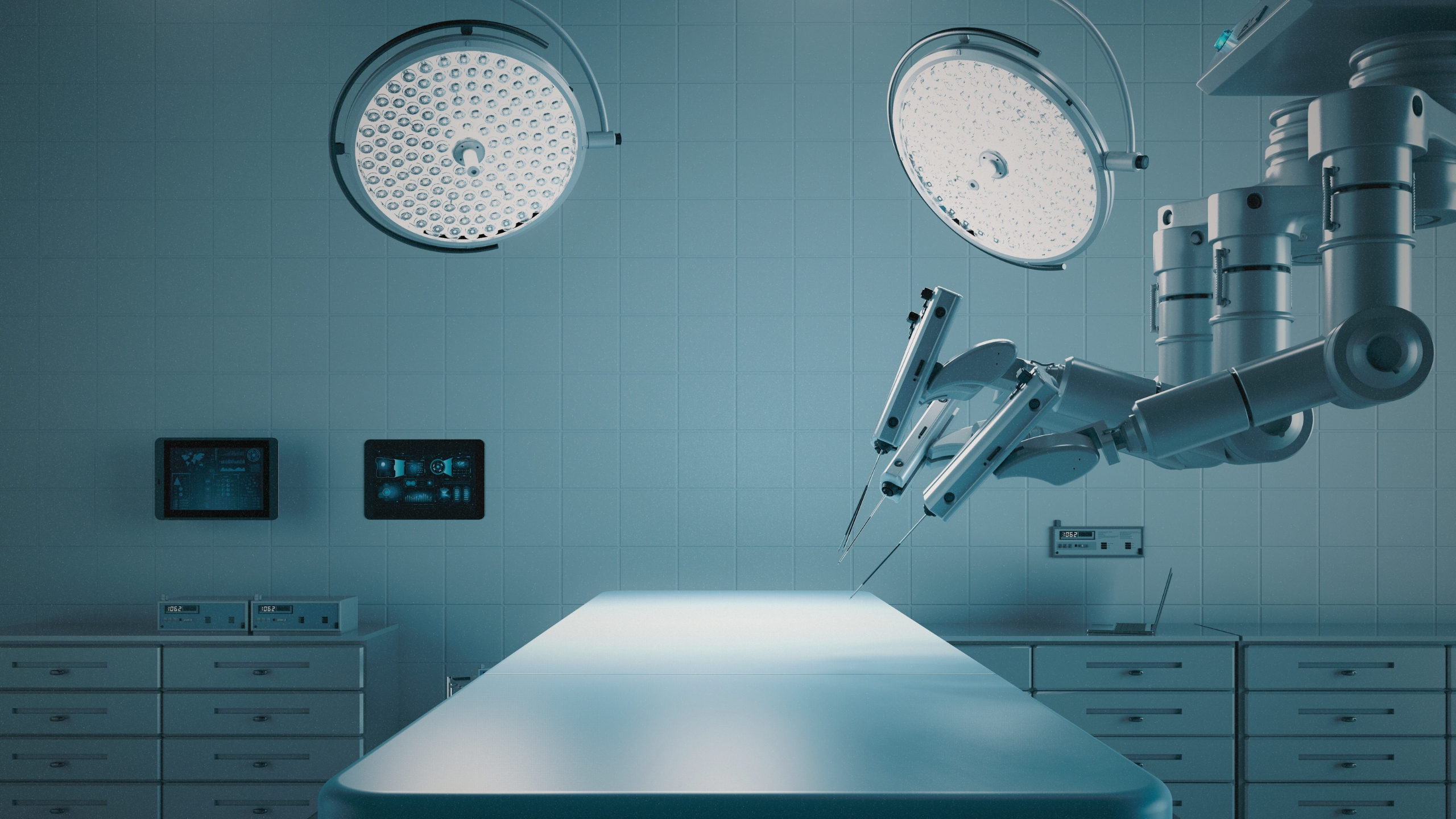

The integration of artificial intelligence (AI) into healthcare has introduced unprecedented opportunities for improving patient outcomes, but it has also raised complex legal and ethical challenges. From AI-powered diagnostic tools to robotic surgeries, healthcare providers are increasingly relying on machine learning algorithms to support clinical decisions.

However, as these systems grow in sophistication, so too do the risks associated with their misuse or failure. For attorneys handling medical malpractice claims, the rise of AI requires a new framework for evaluating negligence, liability, and the evolving standard of care. Here, we explore how AI is reshaping the legal landscape of medical malpractice, with a particular emphasis on ethical dilemmas, potential pitfalls, and what healthcare providers and patients need to know.

Evolving Standards of Care & Liability Risks

AI has begun to alter what is considered a reasonable standard of care in medical settings. Traditionally, the standard of care has been determined by what a reasonably competent physician would do under similar circumstances. However, as AI becomes more accurate and widely available, litigators may begin to argue that physicians were negligent for underutilizing advanced AI tools. Conversely, when doctors do use AI and adverse outcomes occur, questions arise about whether it was appropriate to rely on those systems at all.

A recent study by Johns Hopkins Carey Business School found that physicians are more likely to consult AI in straightforward cases but tend to avoid it in complex scenarios where outcomes are less predictable, primarily due to malpractice concerns. This trend illustrates the growing influence AI has on clinical behavior and raises a critical question: If AI is good enough to be consulted, when does it become negligent not to use it? On the other hand, if AI is consulted and provides incorrect advice, does the fault lie with the machine, the physician, or the developers of the technology? These gray areas of liability make medical malpractice claims involving AI particularly difficult to navigate, and new legal theories and precedent will be needed to determine fault.

Ethical Concerns in Using AI

The use of AI has also prompted a reexamination of long-standing ethical principles in healthcare, particularly with respect to patient autonomy, trust in the doctor-patient relationship, and the moral obligations of healthcare providers.

Informed Consent

Informed consent is one such principle under strain. Patients have traditionally been entitled to understand the risks and benefits of any proposed treatment, but when AI plays a significant role in shaping that treatment, it becomes difficult to explain exactly what the patient is consenting to. Without clear disclosure of how AI is used and what data it was trained on, patients may agree to decisions they do not fully understand.

Patient-Physician Trust

Trust is another area of concern. Many patients expect a human touch that includes empathy, compassion, and nuance in their care. But as AI systems increasingly handle diagnosis and treatment recommendations, there is a risk that the physician's role will become more mechanical and less relational. The erosion of this trust could undermine the effectiveness of treatment and reduce patient satisfaction.

Potential Failures of Using AI in Healthcare

The integration of artificial intelligence into healthcare has introduced new avenues for medical error, ranging from human missteps to technical failures.

One category involves errors by healthcare professionals that occur independently of AI, such as deviating from evidence-based practices or failing to exercise sound clinical judgment. These missteps have traditionally been viewed as the sole responsibility of the physician. When AI systems themselves produce incorrect recommendations, however, often because of poor data quality, flawed training, or overly complex algorithm design, the lines of liability become blurred. In such cases, the fault may lie with the developers or trainers of the AI system, especially if the algorithm was trained on biased or insufficient data that does not generalize to broader patient populations.

Even when AI is properly utilized, misinterpretation of its outputs, such as blindly accepting erroneous recommendations due to automation bias, can result in severe consequences. Ultimately, while AI enables faster, broader analyses of medical data, it still lacks the nuanced reasoning, empathy, and adaptability of human judgment. Misuse of AI can lead to over-reliance on guidelines that do not apply to the unique context of a patient’s situation.

As malpractice cases increasingly involve AI systems, establishing causation and liability will require both a technical understanding of the AI involved and a deep appreciation for the human factors that continue to play a central role in patient outcomes.

Why This Matters for Medical Malpractice

Medical malpractice attorneys must be prepared to navigate an entirely new set of considerations when AI is involved in a client’s care. Whether representing a plaintiff who suffered harm due to an AI-assisted misdiagnosis or defending a provider who relied on a faulty algorithm, attorneys must understand how these tools work, how decisions were made, and what documentation exists to support or challenge a claim.

Evidence collection may involve reviewing not only medical records but also algorithmic outputs, model explanations, and data sources. Experts may be needed to testify on whether the AI tool functioned as expected, or whether its use deviated from accepted medical practice. Most importantly, attorneys must anticipate that the standard of care in AI-assisted medicine is still in flux, and that courts, juries, and regulators are just beginning to grapple with what accountability looks like in this new era.

Staying Ahead of the Curve

Artificial intelligence holds tremendous promise for improving healthcare, but it also introduces new risks that could reshape the legal framework of medical malpractice. As AI becomes a more prominent player in medical decision-making, it challenges traditional notions of liability, ethics, and professional duty. At McCune Law Group, we are committed to staying at the forefront of these changes, advocating for accountability, transparency, and fairness in the evolving world of AI-assisted care.

To learn more about how our attorneys can help you navigate these complex issues, call or fill out our online form to schedule a free evaluation.